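I recently started working with TiDB. I don't have spare machines, so I used a single host to simulate a production cluster. The local environment is as follows: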

```
Linux tihtap 5.15.0-308.179.6.el9uek.aarch64 #2 SMP Wed Apr 23 10:55:03 PDT 2025 aarch64 aarch64 aarch64 GNU/Linux
```

OS: Oracle Linux 9.5; IP: 10.0.0.15; memory: 24 GB; CPU: 4 cores, ARM (aarch64); configuration file: topo.yaml, identical to the official template except for the IP address; TiDB version: v7.1.3.

The server only accepts key-based SSH login, so the private key is passed with `-i`.
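Because every deploy command here authenticates with `-i`, it is worth confirming early that the key is readable only by its owner (the `ll` output later shows `-rw-------`, i.e. mode 600). OpenSSH's client refuses keys that others can read, and 600 is the safe convention either way. A minimal sketch; `check_key_perm` is a hypothetical helper, not part of TiUP, and `stat -c` assumes GNU coreutils as shipped with Oracle Linux.

```shell
#!/bin/sh
# Hypothetical helper (not part of TiUP): check that an SSH private key is
# readable only by its owner before passing it to `tiup cluster ... -i`.
# Assumes GNU coreutils `stat -c`, as shipped with Oracle Linux 9.
check_key_perm() {
  key="$1"
  if [ ! -f "$key" ]; then
    echo "missing: $key" >&2
    return 2
  fi
  mode=$(stat -c '%a' "$key")
  case "$mode" in
    600|400)
      echo "ok: $key ($mode)"
      return 0
      ;;
    *)
      echo "too open: $key ($mode); fix with: chmod 600 $key" >&2
      return 1
      ;;
  esac
}
```

Usage: `check_key_perm ./root.id.key && tiup cluster deploy cjctu v7.1.3 ./topo.yaml --user root -i ./root.id.key`.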

Every step follows the official guide exactly: https://docs.pingcap.com/zh/tidb/v7.1/quick-start-with-tidb/#在单机上模拟部署生产环境集群

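Contents of topo.yaml (the official template; as listed it still uses the template host 10.0.1.1, which was replaced with this machine's IP, 10.0.0.15, for the actual deployment):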

```yaml
# # Global variables are applied to all deployments and used as the default value of
# # the deployments if a specific deployment value is missing.
global:
  user: "tidb"
  ssh_port: 22
  deploy_dir: "/tidb-deploy"
  data_dir: "/tidb-data"

# # Monitored variables are applied to all the machines.
monitored:
  node_exporter_port: 9100
  blackbox_exporter_port: 9115

server_configs:
  tidb:
    instance.tidb_slow_log_threshold: 300
  tikv:
    readpool.storage.use-unified-pool: false
    readpool.coprocessor.use-unified-pool: true
  pd:
    replication.enable-placement-rules: true
    replication.location-labels: ["host"]
  tiflash:
    logger.level: "info"

pd_servers:
  - host: 10.0.1.1

tidb_servers:
  - host: 10.0.1.1

tikv_servers:
  - host: 10.0.1.1
    port: 20160
    status_port: 20180
    config:
      server.labels: { host: "logic-host-1" }

  - host: 10.0.1.1
    port: 20161
    status_port: 20181
    config:
      server.labels: { host: "logic-host-2" }

  - host: 10.0.1.1
    port: 20162
    status_port: 20182
    config:
      server.labels: { host: "logic-host-3" }

tiflash_servers:
  - host: 10.0.1.1

monitoring_servers:
  - host: 10.0.1.1

grafana_servers:
  - host: 10.0.1.1
```
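Since the only change to the official template is the host address, the substitution can be scripted. A minimal sketch with `sed`; `render_topo` is a hypothetical helper and `topo.template.yaml` an assumed file name (keep the template and the output as separate files, because redirecting `sed` onto its own input would truncate it).

```shell
#!/bin/sh
# Hypothetical helper: copy the official template and replace the placeholder
# host 10.0.1.1 with the real address. Dots are escaped so sed matches them
# literally instead of as "any character".
render_topo() {
  template="$1"   # e.g. ./topo.template.yaml (assumed name)
  out="$2"        # e.g. ./topo.yaml
  new_ip="$3"     # e.g. 10.0.0.15
  sed "s/10\.0\.1\.1/${new_ip}/g" "$template" > "$out"
  # Fail loudly if any placeholder survived the substitution.
  if grep -q '10\.0\.1\.1' "$out"; then
    echo "placeholder still present in $out" >&2
    return 1
  fi
}
```

Usage: `render_topo ./topo.template.yaml ./topo.yaml 10.0.0.15`, then review the result before deploying.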

Create a dedicated `tidb` user, set its password, and install TiUP:

```
[root@tihtap ~]
[root@tihtap ~]
Changing password for user tidb.
New password:
BAD PASSWORD: The password contains less than 1 digits
New password:
BAD PASSWORD: The password contains the user name in some form
New password:
BAD PASSWORD: The password contains the user name in some form
passwd: Have exhausted maximum number of retries for service
[root@tihtap ~]
Changing password for user tidb.
New password:
Retype new password:
passwd: all authentication tokens updated successfully.
[root@tihtap ~]
Last failed login: Sun Nov 16 13:32:04 GMT 2025 from 202.125.94.71 on ssh:notty
There were 4 failed login attempts since the last successful login.
[tidb@tihtap ~]$ curl --proto '=https' --tlsv1.2 -sSf https://tiup-mirrors.pingcap.com/install.sh | sh
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100 4367k  100 4367k    0     0  1759k      0  0:00:02  0:00:02 --:--:-- 1758k
Successfully set mirror to https://tiup-mirrors.pingcap.com
Detected shell: bash
Shell profile:  /home/tidb/.bash_profile
/home/tidb/.bash_profile has been modified to add tiup to PATH
open a new terminal or source /home/tidb/.bash_profile to use it
Installed path: /home/tidb/.tiup/bin/tiup
===============================================
Have a try:     tiup playground
===============================================
[tidb@tihtap ~]$ ip -4 a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
2: enp0s6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc mq state UP group default qlen 1000
    inet 10.0.0.15/24 brd 10.0.0.255 scope global dynamic noprefixroute enp0s6
       valid_lft 58551sec preferred_lft 58551sec
```

With topo.yaml and the SSH private key root.id.key in place, deploy the cluster:

```shell
tiup cluster deploy cjctu v7.1.3 ./topo.yaml --user root -i ./root.id.key
```

```
[tidb@tihtap ~]$ ll
total 8
-rw-------. 1 tidb tidb 2622 Nov 18 07:32 root.id.key
-rw-r--r--. 1 tidb tidb 1181 Nov 18 07:31 topo.yaml
[tidb@tihtap ~]$ tiup cluster deploy cjctu v7.1.3 ./topo.yaml --user root -i ./root.id.key
+ Detect CPU Arch Name
  - Detecting node 10.0.0.15 Arch info ... Done
+ Detect CPU OS Name
  - Detecting node 10.0.0.15 OS info ... Done
Please confirm your topology:
Cluster type:    tidb
Cluster name:    cjctu
Cluster version: v7.1.3
Role        Host       Ports                            OS/Arch        Directories
----        ----       -----                            -------        -----------
pd          10.0.0.15  2379/2380                        linux/aarch64  /tidb-deploy/pd-2379,/tidb-data/pd-2379
tikv        10.0.0.15  20160/20180                      linux/aarch64  /tidb-deploy/tikv-20160,/tidb-data/tikv-20160
tikv        10.0.0.15  20161/20181                      linux/aarch64  /tidb-deploy/tikv-20161,/tidb-data/tikv-20161
tikv        10.0.0.15  20162/20182                      linux/aarch64  /tidb-deploy/tikv-20162,/tidb-data/tikv-20162
tidb        10.0.0.15  4000/10080                       linux/aarch64  /tidb-deploy/tidb-4000
tiflash     10.0.0.15  9000/3930/20170/20292/8234/8123  linux/aarch64  /tidb-deploy/tiflash-9000,/tidb-data/tiflash-9000
prometheus  10.0.0.15  9090/9115/9100/12020             linux/aarch64  /tidb-deploy/prometheus-9090,/tidb-data/prometheus-9090
grafana     10.0.0.15  3000                             linux/aarch64  /tidb-deploy/grafana-3000
Attention:
    1. If the topology is not what you expected, check your yaml file.
    2. Please confirm there is no port/directory conflicts in same host.
Do you want to continue? [y/N]: (default=N) y
+ Generate SSH keys ... Done
+ Download TiDB components
  - Download pd:v7.1.3 (linux/arm64) ... Done
  - Download tikv:v7.1.3 (linux/arm64) ... Done
  - Download tidb:v7.1.3 (linux/arm64) ... Done
  - Download tiflash:v7.1.3 (linux/arm64) ... Done
  - Download prometheus:v7.1.3 (linux/arm64) ... Done
  - Download grafana:v7.1.3 (linux/arm64) ... Done
  - Download node_exporter: (linux/arm64) ... Done
  - Download blackbox_exporter: (linux/arm64) ... Done
+ Initialize target host environments
  - Prepare 10.0.0.15:22 ... Done
+ Deploy TiDB instance
  - Copy pd -> 10.0.0.15 ... Done
  - Copy tikv -> 10.0.0.15 ... Done
  - Copy tikv -> 10.0.0.15 ... Done
  - Copy tikv -> 10.0.0.15 ... Done
  - Copy tidb -> 10.0.0.15 ... Done
  - Copy tiflash -> 10.0.0.15 ... Done
  - Copy prometheus -> 10.0.0.15 ... Done
  - Copy grafana -> 10.0.0.15 ... Done
  - Deploy node_exporter -> 10.0.0.15 ... Done
  - Deploy blackbox_exporter -> 10.0.0.15 ... Done
+ Copy certificate to remote host
+ Init instance configs
  - Generate config pd -> 10.0.0.15:2379 ... Done
  - Generate config tikv -> 10.0.0.15:20160 ... Done
  - Generate config tikv -> 10.0.0.15:20161 ... Done
  - Generate config tikv -> 10.0.0.15:20162 ... Done
  - Generate config tidb -> 10.0.0.15:4000 ... Done
  - Generate config tiflash -> 10.0.0.15:9000 ... Done
  - Generate config prometheus -> 10.0.0.15:9090 ... Done
  - Generate config grafana -> 10.0.0.15:3000 ... Done
+ Init monitor configs
  - Generate config node_exporter -> 10.0.0.15 ... Done
  - Generate config blackbox_exporter -> 10.0.0.15 ... Done
Enabling component pd
    Enabling instance 10.0.0.15:2379
    Enable instance 10.0.0.15:2379 success
Enabling component tikv
    Enabling instance 10.0.0.15:20162
    Enabling instance 10.0.0.15:20160
    Enabling instance 10.0.0.15:20161
    Enable instance 10.0.0.15:20162 success
    Enable instance 10.0.0.15:20160 success
    Enable instance 10.0.0.15:20161 success
Enabling component tidb
    Enabling instance 10.0.0.15:4000
    Enable instance 10.0.0.15:4000 success
Enabling component tiflash
    Enabling instance 10.0.0.15:9000
    Enable instance 10.0.0.15:9000 success
Enabling component prometheus
    Enabling instance 10.0.0.15:9090
    Enable instance 10.0.0.15:9090 success
Enabling component grafana
    Enabling instance 10.0.0.15:3000
    Enable instance 10.0.0.15:3000 success
Enabling component node_exporter
    Enabling instance 10.0.0.15
    Enable 10.0.0.15 success
Enabling component blackbox_exporter
    Enabling instance 10.0.0.15
    Enable 10.0.0.15 success
Cluster `cjctu` deployed successfully, you can start it with command: `tiup cluster start cjctu --init`
[tidb@tihtap ~]$ tiup cluster start cjctu --init
Starting cluster cjctu...
+ [ Serial ] - SSHKeySet: privateKey=/home/tidb/.tiup/storage/cluster/clusters/cjctu/ssh/id_rsa, publicKey=/home/tidb/.tiup/storage/cluster/clusters/cjctu/ssh/id_rsa.pub
+ [Parallel] - UserSSH: user=tidb, host=10.0.0.15
+ [Parallel] - UserSSH: user=tidb, host=10.0.0.15
+ [Parallel] - UserSSH: user=tidb, host=10.0.0.15
+ [Parallel] - UserSSH: user=tidb, host=10.0.0.15
+ [Parallel] - UserSSH: user=tidb, host=10.0.0.15
+ [Parallel] - UserSSH: user=tidb, host=10.0.0.15
+ [Parallel] - UserSSH: user=tidb, host=10.0.0.15
+ [Parallel] - UserSSH: user=tidb, host=10.0.0.15
+ [ Serial ] - StartCluster
Starting component pd
    Starting instance 10.0.0.15:2379
    Start instance 10.0.0.15:2379 success
Starting component tikv
    Starting instance 10.0.0.15:20162
    Starting instance 10.0.0.15:20160
    Starting instance 10.0.0.15:20161
    Start instance 10.0.0.15:20160 success
    Start instance 10.0.0.15:20161 success
    Start instance 10.0.0.15:20162 success
Starting component tidb
    Starting instance 10.0.0.15:4000
    Start instance 10.0.0.15:4000 success
Starting component tiflash
    Starting instance 10.0.0.15:9000
    Start instance 10.0.0.15:9000 success
Starting component prometheus
    Starting instance 10.0.0.15:9090
    Start instance 10.0.0.15:9090 success
Starting component grafana
    Starting instance 10.0.0.15:3000
    Start instance 10.0.0.15:3000 success
Starting component node_exporter
    Starting instance 10.0.0.15
    Start 10.0.0.15 success
Starting component blackbox_exporter
    Starting instance 10.0.0.15
    Start 10.0.0.15 success
+ [ Serial ] - UpdateTopology: cluster=cjctu
Started cluster `cjctu` successfully
The root password of TiDB database has been changed.
The new password is: '5j-dfxxxxxxx^h02*'.
Copy and record it to somewhere safe, it is only displayed once, and will not be stored.
The generated password can NOT be get and shown again.
[tidb@tihtap ~]$
```

```
[tidb@tihtap ~]$ tiup cluster list
Checking updates for component cluster...
Name   User  Version  Path                                             PrivateKey
----   ----  -------  ----                                             ----------
cjctu  tidb  v7.1.3   /home/tidb/.tiup/storage/cluster/clusters/cjctu  /home/tidb/.tiup/storage/cluster/clusters/cjctu/ssh/id_rsa
[tidb@tihtap ~]$
```

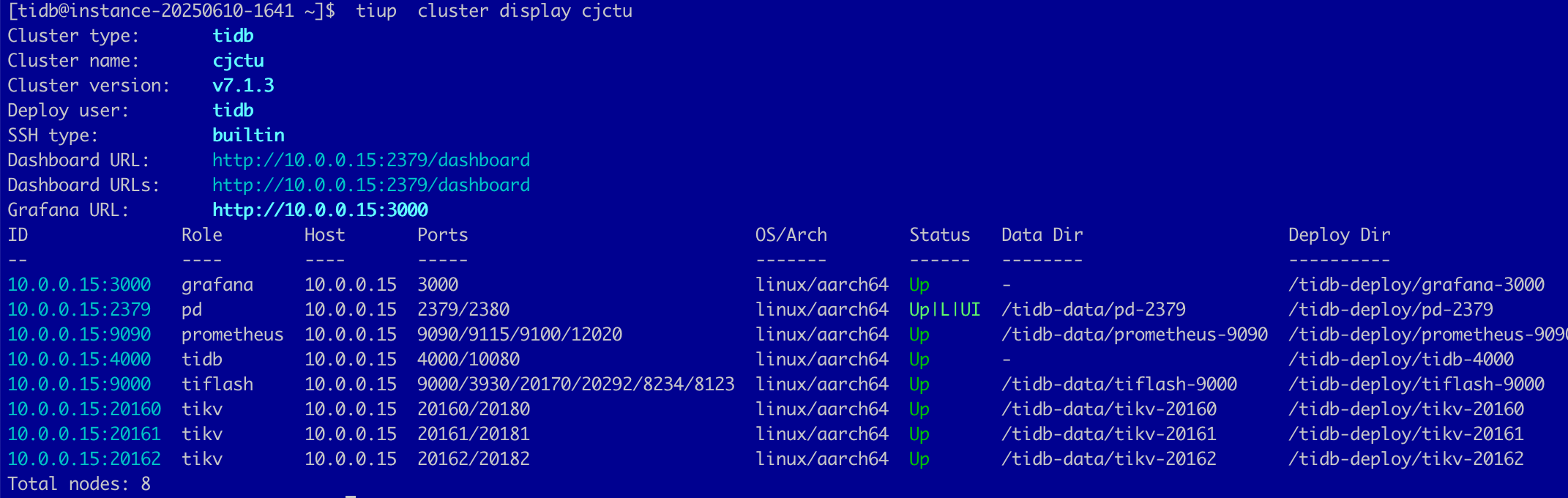

```
[tidb@tihtap ~]$ tiup cluster display cjctu
Cluster type:       tidb
Cluster name:       cjctu
Cluster version:    v7.1.3
Deploy user:        tidb
SSH type:           builtin
Dashboard URL:      http://10.0.0.15:2379/dashboard
Dashboard URLs:     http://10.0.0.15:2379/dashboard
Grafana URL:        http://10.0.0.15:3000
ID               Role        Host       Ports                            OS/Arch        Status   Data Dir                    Deploy Dir
--               ----        ----       -----                            -------        ------   --------                    ----------
10.0.0.15:3000   grafana     10.0.0.15  3000                             linux/aarch64  Up       -                           /tidb-deploy/grafana-3000
10.0.0.15:2379   pd          10.0.0.15  2379/2380                        linux/aarch64  Up|L|UI  /tidb-data/pd-2379          /tidb-deploy/pd-2379
10.0.0.15:9090   prometheus  10.0.0.15  9090/9115/9100/12020             linux/aarch64  Up       /tidb-data/prometheus-9090  /tidb-deploy/prometheus-9090
10.0.0.15:4000   tidb        10.0.0.15  4000/10080                       linux/aarch64  Up       -                           /tidb-deploy/tidb-4000
10.0.0.15:9000   tiflash     10.0.0.15  9000/3930/20170/20292/8234/8123  linux/aarch64  Up       /tidb-data/tiflash-9000     /tidb-deploy/tiflash-9000
10.0.0.15:20160  tikv        10.0.0.15  20160/20180                      linux/aarch64  Up       /tidb-data/tikv-20160       /tidb-deploy/tikv-20160
10.0.0.15:20161  tikv        10.0.0.15  20161/20181                      linux/aarch64  Up       /tidb-data/tikv-20161       /tidb-deploy/tikv-20161
10.0.0.15:20162  tikv        10.0.0.15  20162/20182                      linux/aarch64  Up       /tidb-data/tikv-20162       /tidb-deploy/tikv-20162
Total nodes: 8
[tidb@tihtap ~]$
```
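To spot a failing instance without reading the whole table, the Status column can be filtered. A minimal sketch, assuming the column layout shown above (ID first, Status sixth) stays stable across TiUP versions; `not_up` is a hypothetical helper that reads the output on stdin so it can be tested offline.

```shell
#!/bin/sh
# Hypothetical helper: read `tiup cluster display <name>` output on stdin and
# print "ID STATUS" for every instance whose status does not start with "Up".
# Assumes the column order: ID Role Host Ports OS/Arch Status Data-Dir Deploy-Dir.
# Exit status: 0 if all instances are Up, 1 otherwise.
not_up() {
  awk '
    $1 == "ID" && $2 == "Role"          { in_table = 1; next }  # header row
    in_table && $1 ~ /^--/              { next }                # separator row
    /^Total nodes/                      { in_table = 0 }        # end of table
    in_table && NF >= 6 && $6 !~ /^Up/  { print $1, $6; bad++ }
    END { exit (bad > 0) }
  '
}
```

Usage: `tiup cluster display cjctu | not_up || echo "some instances are not Up"`.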

Appendix: an AI-generated summary of the cluster information

TiDB cluster information in detail

Based on the `tiup cluster display cjctu` output above, here is an explanation of each part of this TiDB cluster.

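Multi-port configuration

Why do some components have several ports? Different TiDB components use multiple ports to handle different kinds of communication and services: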

TiFlash ports (9000/3930/20170/20292/8234/8123):

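- 9000: TiFlash TCP service port, handling the main data query requests
- 3930: TiFlash Raft and Coprocessor service port, used for data replication and coprocessor communication
- 20170: TiFlash Proxy service port, acting as the TiKV-compatible layer
- 20292: port from which Prometheus pulls TiFlash Proxy metrics
- 8234: port from which Prometheus pulls TiFlash metrics
- 8123: TiFlash HTTP service port (used for some management operations)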

Prometheus ports (9090/9115/9100/12020):

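- 9090: Prometheus service port
- 9115: Blackbox_exporter port, used to probe the status of ports in the TiDB cluster
- 9100: Node_exporter port, reporting system information for each node of the TiDB cluster
- 12020: NgMonitoring service port

Strictly speaking, 9115 and 9100 belong to the blackbox_exporter and node_exporter that TiUP deploys on every host (the `monitored` section of topo.yaml); `tiup cluster display` simply lists them on the Prometheus row.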

Other components:

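- PD (2379/2380): 2379 is used for communication with TiDB; 2380 is used for communication between PD nodes inside the cluster.
- TiDB (4000/10080): 4000 is the port that applications and DBA tools connect to; 10080 reports TiDB status.
- TiKV (20160/20180): 20160 is the TiKV communication port; 20180 reports TiKV status. The other two TiKV instances use 20161/20181 and 20162/20182.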
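A quick way to see these ports in use is a handful of smoke tests. The sketch below only prints the commands for a given host and executes nothing. The `mysql` line relies on TiDB's MySQL-compatible port 4000; the HTTP paths (`/status` on the TiDB status port, `/pd/api/v1/health` on PD, `/metrics` on the TiKV status ports) are the ones I believe these components expose, so verify them against the v7.1 documentation. `smoke_cmds` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: print (not run) smoke-test commands for a single-host
# cluster laid out like this post. HTTP paths are assumptions to verify.
smoke_cmds() {
  host="$1"
  echo "mysql -h $host -P 4000 -u root -p"           # TiDB SQL port
  echo "curl -s http://$host:10080/status"            # TiDB status port
  echo "curl -s http://$host:2379/pd/api/v1/health"   # PD client port
  for p in 20180 20181 20182; do                      # one status port per TiKV
    echo "curl -s http://$host:$p/metrics | head -n 5"
  done
}
```

Usage: `smoke_cmds 10.0.0.15`, then run the printed lines one by one; the MySQL login uses the root password printed by `tiup cluster start cjctu --init`.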

Status indicators

Values in the Status column:

- Up: the component is running normally
- Up|L|UI:
  - Up: running normally
  - L: leader (the leader node among the PD instances)
  - UI: serves a web UI (TiDB Dashboard is built into PD)

This status information is obtained by querying the running cluster (PD in particular) and reflects each component's actual state and role.
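The flags can also be decoded mechanically. A minimal sketch; `decode_status` is a hypothetical helper that maps only the three flags described above and echoes any other value (for example `Down`) unchanged rather than guessing its meaning.

```shell
#!/bin/sh
# Hypothetical helper: expand a Status value such as "Up|L|UI" into words,
# joined by commas. Only the flags described in this post are mapped.
decode_status() {
  printf '%s\n' "$1" | tr '|' '\n' | while read -r flag; do
    case "$flag" in
      Up) echo "running" ;;
      L)  echo "PD leader" ;;
      UI) echo "serves TiDB Dashboard" ;;
      *)  echo "$flag" ;;                  # unknown flag: pass through as-is
    esac
  done | paste -sd ',' -
}
```

For example, `decode_status 'Up|L|UI'` prints `running,PD leader,serves TiDB Dashboard`.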

Directory layout

Data Dir (data directory): stores each component's actual data files.

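- Components with a data directory: PD, Prometheus, TiFlash and TiKV, which need persistent storage.
- Components shown as "-": TiDB and Grafana. TiDB is a stateless component, and TiUP does not give Grafana a separate data directory.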

Deploy Dir (deployment directory): stores each component's binaries, configuration files and logs.

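- Every component has a deploy directory holding its program files and configuration.
- The name usually follows the pattern `/tidb-deploy/<component>-<port>`, e.g. `/tidb-deploy/tikv-20160`.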
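Because both directories follow `<base>/<component>-<port>`, they can be derived from the topology. A minimal sketch, assuming the `deploy_dir`/`data_dir` values from the `global` section of topo.yaml above; `instance_dirs` is a hypothetical helper, and it treats TiDB and Grafana as having no data directory, matching the display output.

```shell
#!/bin/sh
# Hypothetical helper: derive the deploy and data directories of an instance
# from the <base>/<component>-<port> naming seen in `tiup cluster display`.
DEPLOY_BASE=/tidb-deploy   # global.deploy_dir in topo.yaml
DATA_BASE=/tidb-data       # global.data_dir in topo.yaml

instance_dirs() {
  comp="$1"; port="$2"
  case "$comp" in
    tidb|grafana) data="-" ;;                    # no data dir in this layout
    *)            data="$DATA_BASE/$comp-$port" ;;
  esac
  echo "$DEPLOY_BASE/$comp-$port $data"
}
```

For example, `instance_dirs tikv 20161` prints `/tidb-deploy/tikv-20161 /tidb-data/tikv-20161`, matching the table.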

Single-machine deployment analysis

Current setup: the cluster runs on a single machine (every component is on 10.0.0.15), yet it has 3 TiKV instances:

- tikv-20160
- tikv-20161
- tikv-20162

Comparison with a typical 3-node high-availability setup

Typical production configuration:

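- 3 physical nodes, each hosting different components
- TiKV nodes spread across different machines, with the Raft consensus algorithm providing high availability
- At least 3 TiKV nodes, to satisfy the default of 3 data replicas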

This single-machine configuration:

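- Advantage: it meets the minimum TiKV instance count, so Raft consensus works normally.
- Risk: single point of failure; if this machine fails, the whole cluster becomes unavailable.
- Suitable for: development, testing or learning environments.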
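The "3 TiKV instances for 3 replicas" rule comes from Raft majority: a Region stays available while a majority (quorum) of its replicas is alive, with quorum = floor(n/2) + 1. A small arithmetic sketch; `raft_quorum` is a hypothetical helper. In this topology the distinct `server.labels` host values combined with `replication.location-labels: ["host"]` let PD place the three replicas on different TiKV instances, so the one tolerated failure covers a single TiKV process, not the machine itself.

```shell
#!/bin/sh
# Raft majority arithmetic: quorum = floor(n/2) + 1,
# tolerated replica failures = n - quorum.
raft_quorum() {
  n="$1"
  q=$(( n / 2 + 1 ))
  echo "replicas=$n quorum=$q tolerated_failures=$(( n - q ))"
}
```

For example, `raft_quorum 3` prints `replicas=3 quorum=2 tolerated_failures=1`, and `raft_quorum 5` prints `replicas=5 quorum=3 tolerated_failures=2`.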

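Common TiUP Cluster commands for day-to-day maintenance

TiUP Cluster is the core tool for managing TiDB clusters and covers the full cluster lifecycle. The commonly used maintenance commands, grouped by function, are: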

Basic cluster management

Starting and stopping the cluster

`tiup cluster start <cluster-name>`

Component start order: PD > TiKV > TiDB > TiFlash > TiCDC > Prometheus > Grafana > Alertmanager

Use `-R` to select roles and `-N` to select nodes.

Example: `tiup cluster start test-cluster -R pd` (starts only the PD component)

`tiup cluster stop <cluster-name>`

Component stop order: Alertmanager > Grafana > Prometheus > TiCDC > TiFlash > TiDB > TiKV > PD

`-R` and `-N` work here too, for stopping selectively.
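Comparing the two lists, the stop order is exactly the start order reversed. A small sketch that derives one from the other and prints (without running) role-by-role stop commands; a plain `tiup cluster stop cjctu` already applies this order, so this is only illustrative. The lowercase role names, including `cdc` for TiCDC, are my assumption of TiUP's role naming, and roles not deployed in this cluster (`cdc`, `alertmanager`) should be dropped from the list before using it here.

```shell
#!/bin/sh
# Start order from the list above, as lowercase TiUP role names (assumed).
START_ORDER="pd tikv tidb tiflash cdc prometheus grafana alertmanager"

# Reverse a space-separated list.
reverse_list() {
  out=""
  for item in $1; do
    out="$item $out"
  done
  echo $out   # unquoted on purpose: word splitting drops the trailing space
}

# Print (not run) per-role stop commands in reverse start order.
stop_cmds() {
  cluster="$1"
  for role in $(reverse_list "$START_ORDER"); do
    echo "tiup cluster stop $cluster -R $role"
  done
}
```

`reverse_list "$START_ORDER"` yields `alertmanager grafana prometheus cdc tiflash tidb tikv pd`, which matches the documented stop order.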

`tiup cluster restart <cluster-name>`

Viewing cluster status

`tiup cluster list`

Shows each cluster's name, deploy user, version, path and private key.

This metadata is stored under `~/.tiup/storage/cluster/clusters/`.
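Since the cluster metadata lives under `~/.tiup/storage/cluster/clusters/` (one subdirectory per cluster, e.g. `cjctu` with its `ssh/id_rsa`), the cluster names can also be read from the filesystem. A minimal sketch; `list_clusters` is a hypothetical helper, and the base path is a parameter in case TiUP's home was relocated (e.g. via `TIUP_HOME`, which is an assumption here). It only lists directory names and does not parse any metadata.

```shell
#!/bin/sh
# Hypothetical helper: list cluster names from TiUP's cluster storage dir.
list_clusters() {
  base="${1:-$HOME/.tiup/storage/cluster/clusters}"
  [ -d "$base" ] || return 0          # nothing deployed yet
  for d in "$base"/*/; do
    [ -d "$d" ] || continue           # glob matched nothing
    basename "$d"                     # basename strips the trailing slash
  done
}
```

Usage: `list_clusters` on this host should print `cjctu`.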

`tiup cluster display <cluster-name>`

Shows each component's status, ports and directories; resource usage is shown with `--process`.

Options include `--dashboard`, `--node`, `--role`, `--process` and `--uptime`.

Checks and auditing

`tiup cluster check <topology.yml | cluster-name>`

Runs hardware and software checks to verify that the hosts are ready for deployment.

- `--apply` tries to fix the problems it finds automatically
- `--cluster` checks an existing cluster
- `--enable-cpu`, `--enable-disk` and `--enable-mem` enable performance checks
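Applied to this post's deployment, the check would run against the same topology file, SSH user and key as the `deploy` command. A dry-run sketch: `run`, `preflight` and the `DRY_RUN` variable are hypothetical; with `DRY_RUN=1` the commands are printed instead of executed. Passing `apply` as the third argument adds `--apply`, which is best done only after reading the plain report.

```shell
#!/bin/sh
# Hypothetical wrapper: print the command when DRY_RUN=1, otherwise run it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Pre-flight check with the same SSH user and key as `tiup cluster deploy`.
# Usage: preflight <topology.yaml> <key-file> [apply]
preflight() {
  topo="$1"; key="$2"
  if [ "${3:-}" = "apply" ]; then
    run tiup cluster check "$topo" --apply --user root -i "$key"
  else
    run tiup cluster check "$topo" --user root -i "$key"
  fi
}
```

Usage: `preflight ./topo.yaml ./root.id.key`, then `preflight ./topo.yaml ./root.id.key apply` once the report has been reviewed.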

`tiup cluster audit [audit-id]`

Without an audit-id, lists the history of executed commands.

With an audit-id, shows the execution log of that command.

Configuration management

`tiup cluster edit-config <cluster-name>`

Opens the editor named by the `$EDITOR` environment variable (vi by default).

Changes take effect only after running `reload`.

`tiup cluster reload <cluster-name>`

Applies configuration changes and restarts the affected services.

The restarts follow the same rolling procedure as an upgrade, so the cluster stays available.
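One change cycle on this cluster, for example adjusting `instance.tidb_slow_log_threshold` from the `server_configs.tidb` section of topo.yaml, could look like the sketch below: edit the stored configuration, then reload only the affected role with `-R`. `run`, `change_config` and `DRY_RUN` are hypothetical helpers for illustration; with `DRY_RUN=1` the commands are printed instead of executed.

```shell
#!/bin/sh
# Hypothetical wrapper: print the command when DRY_RUN=1, otherwise run it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Edit the stored topology/config, then reload only the given role.
change_config() {
  cluster="$1"; role="$2"
  run tiup cluster edit-config "$cluster"        # opens $EDITOR (vi by default)
  run tiup cluster reload "$cluster" -R "$role"  # rolling restart of that role
}
```

Usage: `change_config cjctu tidb` after deciding the change only affects TiDB.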

Scaling

Scaling out

Scaling in

`tiup cluster scale-in <cluster-name> --node <node-id>`

A multi-step process: take the service offline, update the topology, clean up the data.

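TiKV and TiFlash need extra steps to keep data consistent: the node goes offline asynchronously while its data is migrated to the remaining nodes.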

`tiup cluster prune <cluster-name>`

Manually cleans up the data and services left behind after scaling in.

Run it only after data scheduling (migration) has finished.

Backup and troubleshooting

Backup

`tiup cluster meta backup <cluster-name> [backup-file]`

Backs up the TiUP metadata files needed to manage the cluster.

The backup file path can be customized.

`tiup cluster meta restore <cluster-name> <backup-file>`

Troubleshooting

`tiup cluster patch <cluster-name> <package-path>`

Replaces a service binary while the cluster is running.

The full flow is: upload, stop, replace, start.

`tiup cluster clean <cluster-name>`

- `--data` cleans the data directories
- `--log` cleans the log directories
- `--all` cleans all data and logs
- `--ignore-role` and `--ignore-node` exclude specific components

Other maintenance commands

`tiup cluster upgrade <cluster-name> <version>`

`tiup cluster destroy <cluster-name>`

`tiup cluster enable <cluster-name>`

`tiup cluster disable <cluster-name>`

`tiup cluster replay <cluster-name> <audit-id>`

`tiup cluster rename <cluster-name> <new-name>`

Original author:

liups.com

Original link:

http://liups.com/posts/ab889653/

License: Creative Commons Attribution-NonCommercial 4.0 International (CC BY-NC 4.0)